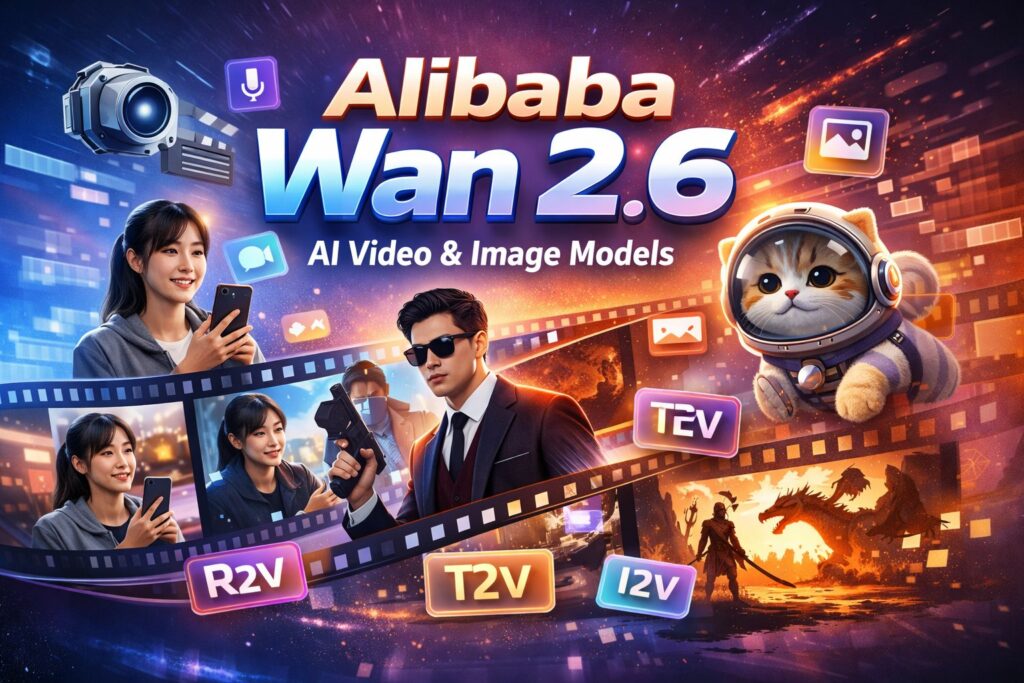

Alibaba has introduced Wan 2.6, the latest version of its AI-powered visual generation models, designed to make professional-quality video creation more accessible to creators worldwide. The release centers on one major shift: letting users appear in AI-generated videos as themselves, with their own appearance and voice, while keeping visuals consistent across multiple scenes.

Unveiled in Hangzhou, China, the Wan 2.6 series brings upgrades across Alibaba’s text-to-video, image-to-video, and image generation models, with new tools aimed at longer, more coherent storytelling and improved audio-visual realism.

Reference-to-Video: Putting Yourself Into AI-Generated Scenes

The standout feature of Wan 2.6 is Wan2.6-R2V, a new reference-to-video generation model. Users upload a short reference video of a person, animal, or object that captures the subject's visual appearance and, where applicable, its voice. From text prompts, the model then generates entirely new scenes starring that same subject while preserving its look, voice, and overall identity.

This lets creators insert themselves, or other characters, directly into AI-generated content without losing consistency. Because multiple subjects can appear in a single video, the model suits dialogue-driven scenes, short dramas, and narrative storytelling.

Alibaba says this is the first reference-to-video model of its kind in China, and the company expects it to significantly cut production time for short-form video creators, who previously needed repeated shoots, voiceovers, or manual editing to achieve similar results.

Smarter Multi-Shot Storytelling and Better Audio Sync

Beyond reference-based video generation, Wan 2.6 introduces intelligent multi-shot storytelling, enabling creators to build videos with several connected scenes while maintaining visual and character consistency from start to finish.

Audio-visual synchronization has also been improved. The updated models deliver more accurate lip movement, better alignment between sound and motion, and richer sound effects through enhanced audio-to-video generation. These upgrades help scenes feel more natural and immersive, particularly in dialogue-heavy content.

Video output now supports durations of up to 15 seconds, giving creators more room to develop ideas without compressing everything into ultra-short clips. The models also follow instructions more accurately, responding more precisely to detailed prompts and producing cleaner visuals and more predictable results.

Expanded Image Generation With Stronger Creative Control

Wan 2.6 also upgrades Alibaba’s image generation tools, including Wan2.6-image and Wan2.6-T2I. These models support interleaved text and image outputs, allowing creators to build visuals step-by-step while maintaining narrative coherence.

The image models show stronger logical reasoning, improved artistic style control, and high-fidelity portrait generation. They also support detailed image editing and can handle long, complex prompts in both Chinese and English, making them suitable for design, illustration, and concept development work.

According to Alibaba, these improvements are aimed at helping creators translate nuanced ideas into visuals without sacrificing accuracy or artistic intent.

Where Creators Can Access Wan 2.6

Developers and creators can access the Wan 2.6 models through Alibaba Cloud’s Model Studio, as well as via Wan’s official website. Alibaba also plans to integrate the models into the Qwen App, its flagship AI application, expanding access for everyday users.

First introduced earlier this year, the Wan series has been updated continuously, with Wan 2.6 representing its most comprehensive upgrade to date. The release underscores Alibaba’s growing focus on AI-driven multimedia tools that support both creative expression and real-world production workflows.

For content creators, filmmakers, marketers, and developers, Alibaba positions Wan 2.6 as a practical step toward faster, more flexible, and more personalized AI-generated video and image creation.